MAGIC CAMERA

2024

In a world where technology is often used to replace human labor on existing tasks, how can we leverage our tools to create new experiences that would not otherwise be possible? This project reimagines the nature of photography: while a traditional camera simply captures a snapshot of what the eye sees, we envision a tool that instead transforms the perceived world.

Our camera uses generative image models to create alternate realities from the photos it takes. Images are captured with a Raspberry Pi camera module, then sent to cloud-hosted models for inference.
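As a rough illustration of this capture-then-infer flow, the sketch below packages a photo and a text instruction into an HTTP request. The endpoint URL, the JSON field names (`image_b64`, `instruction`), and both function names are hypothetical; the actual hosting service and request format are not described here. `image_bytes` is assumed to be an encoded photo already read from the camera.

```python
import base64
import json
import urllib.request

# Hypothetical endpoint: a placeholder, not the project's real service.
INFERENCE_URL = "https://example.com/v1/transform"


def build_request(image_bytes: bytes, instruction: str,
                  url: str = INFERENCE_URL) -> urllib.request.Request:
    """Package a captured photo and a text instruction as a JSON POST request."""
    payload = {
        # Binary image data is base64-encoded so it can travel inside JSON.
        "image_b64": base64.b64encode(image_bytes).decode("ascii"),
        "instruction": instruction,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def transform_photo(image_bytes: bytes, instruction: str,
                    timeout: float = 60.0) -> bytes:
    """Send the photo to the assumed endpoint and return the generated image bytes."""
    request = build_request(image_bytes, instruction)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read())
    # Assumes the service answers with the same base64-in-JSON convention.
    return base64.b64decode(body["image_b64"])
```

Keeping the heavy models off the device means the Raspberry Pi only has to capture, upload, and display, at the cost of needing a network connection in the field.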

The tangible nature of the camera is a departure from our typical pattern of interactions with machine learning models behind a screen and keyboard. When users take the camera out into the world, they can iteratively experiment with models in a rapid feedback loop and co-create with the technology in an intuitive, conversation-like interaction.

We found that users adapted their approach to taking photos as they experimented with the camera. Rather than hunting for visually striking scenes, they began to think in terms of underlying patterns and structures, seeking out subjects for their potential for transformation.

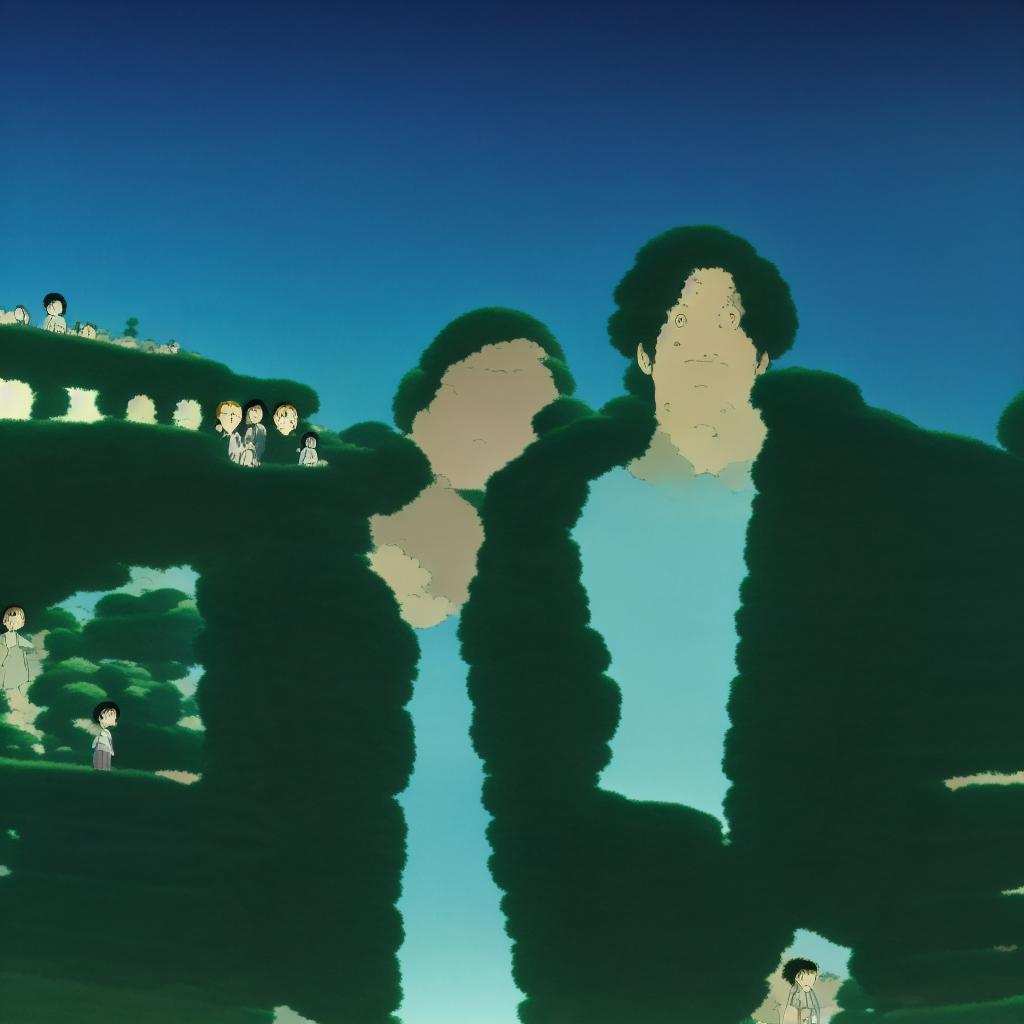

We use InstructPix2Pix, a conditional diffusion model for image editing from language instructions, to perform one-shot style transfer on photos. Shown above are the Studio Ghibli (left) and watercolor (right) styles.

We deconstruct an image into object-level spatial and semantic information, then reconstruct a new scene with the same arrangement of objects. We use Detic for open-vocabulary object detection and GLIGEN for grounded generation.
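To make the handoff between detection and generation concrete, here is a minimal sketch of one way to turn detector output into a layout for grounded generation: a list of phrases paired with bounding boxes normalized to the range [0, 1]. The `Detection` type, the function names, the score threshold, and the object cap are illustrative assumptions, not the project's actual code or the Detic or GLIGEN APIs.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected object (hypothetical container for detector output)."""
    label: str
    box: tuple[float, float, float, float]  # pixels: (x_min, y_min, x_max, y_max)
    score: float


def _unit(value: float) -> float:
    """Clamp a normalized coordinate into [0, 1]."""
    return max(0.0, min(1.0, value))


def to_layout(detections, width, height, min_score=0.5, max_objects=30):
    """Convert pixel-space detections into (phrases, normalized boxes).

    min_score and max_objects are assumed defaults chosen for illustration.
    """
    confident = [d for d in detections if d.score >= min_score]
    # Keep the most confident objects first if there are too many.
    kept = sorted(confident, key=lambda d: d.score, reverse=True)[:max_objects]
    phrases = [d.label for d in kept]
    boxes = [
        [_unit(d.box[0] / width), _unit(d.box[1] / height),
         _unit(d.box[2] / width), _unit(d.box[3] / height)]
        for d in kept
    ]
    return phrases, boxes


def scene_prompt(phrases, setting):
    """Build a text prompt naming each distinct object once, in first-seen order."""
    unique = list(dict.fromkeys(phrases))
    return f"{setting} with " + ", ".join(unique)
```

For example, a 640x480 photo with a confident "dog" and "bench" detection yields two phrases and two normalized boxes, and `scene_prompt(phrases, "a snowy park")` describes a new scene in which those objects keep their original positions.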

This project was a collaboration with Eshaan Moorjani, Annie Zhang, Arjun Banerjee, Dhruv Gautam, Erica Liu, Selena Zhao, and Sophie Xie.

Launchpad

Berkeley, CA